Building neural nets in Minecraft

This post explains the thinking behind my project scarpet-nn, which lets you implement binarized neural nets in Minecraft.

I think a neural network in Minecraft is a cool idea because it opens up so many new possibilities in-game. Think of custom maps where mapmakers hide a secret passage or room that is accessible only when you draw a certain pattern on a wall and the in-game neural network recognizes it! We could have games where completing each stage computes the output of one layer of your neural network, so completing the stages in the wrong order gives you the wrong answer. We could have a multiplayer game where one team modifies the network's activations to change its output while the other team changes the weights to keep the output the same. Beyond all this, I think just watching the neural network calculate its results block by block in Minecraft would be very satisfying!

Am I the first to think of this?

No, of course not. There’s always someone who has thought of the same thing before you (ALWAYS).

After some quick googling, I found an implementation of an MNIST ConvNet in Minecraft. It was decently fast, but it required a ton of memory, wasn’t server friendly at all and used more than 2 million (!!!) command blocks. This meant that a custom map creator who wanted to use it on their server would need compute comparable to a dedicated data center to run the server smoothly. Moreover, it offers no flexibility to reconfigure the neural network: to implement a new network, you would need to rewrite or regenerate all those millions of command blocks. Plus, it leaves no room for a nice block-by-block visualization.

Design

I decided to limit my scope to implementing only conv and fully connected layers (for now). These layers are easy to implement since they only need multiplication and addition operations. My initial idea was to take a CPU designed in Minecraft (such as this amazing 32 bit FPU), take a memory designed in Minecraft (like this video), connect them using some redstone magic and then finally build a redstone circuit that reads the model from memory, executes it and stores the result back in memory.

However, this was a hell of a lot more tedious than I initially imagined. The memory design wasn’t fully scalable, which would mean that users had to rewire the memory to the CPU themselves, and that isn’t exactly good design. But most importantly, the FPU or CPU just wasn’t fast enough. Each operation on that CPU or FPU took about 1.5-2s to calculate on my decently fast real-world CPU, which is to be expected given the limited in-game capabilities. Note that when I rant about 1.5-2s per FPU operation, it’s only in the context of neural networks, because they need thousands of these operations. From an electrical engineering standpoint, the FPU I am referring to is very elegantly designed.

So after this I thought of quantizing the neural network so that fewer bits are used to represent each number in the weights and activations of the neural net. Better yet, I opted for binarized neural networks (BNNs). These use only a single bit to represent every number in the computation. Because of this, multiplication and addition operations become a lot easier.

Binarized Multiplication

The following table describes the multiplication of two weights or activations when binarized to +1 or -1.

| $$A$$ | $$B$$ | $$C = A * B$$ |
|---|---|---|
| -1 | -1 | 1 |
| -1 | 1 | -1 |
| 1 | -1 | -1 |
| 1 | 1 | 1 |

In bit representation (with -1 stored as bit 0 and +1 stored as bit 1), this multiplication is exactly an XNOR gate.

| $$A$$ | $$B$$ | $$C = A * B$$ | $$C =\overline{A \oplus B}$$ |
|---|---|---|---|
| 0 | 0 | 1 | 1 |
| 0 | 1 | 0 | 0 |
| 1 | 0 | 0 | 0 |
| 1 | 1 | 1 | 1 |

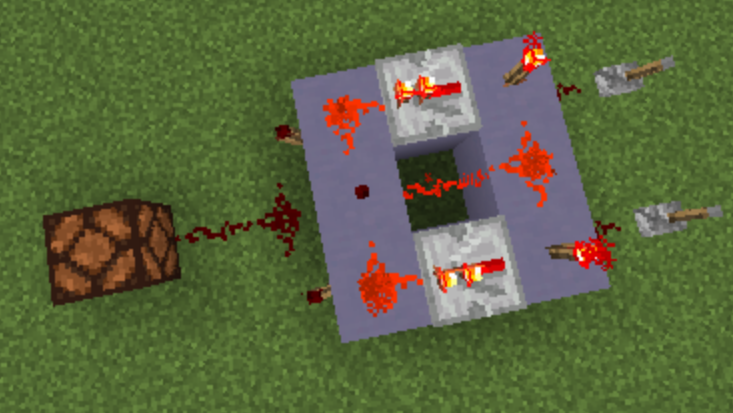
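The equivalence between the two tables can be checked with a few lines of Python. This is only a minimal sketch: the helper names `to_bit`, `to_value` and `xnor` are made up for illustration and are not part of scarpet-nn.

```python
from itertools import product


def to_bit(value):
    """Encode a binarized value as a bit: -1 -> 0, +1 -> 1."""
    return (value + 1) // 2


def to_value(bit):
    """Decode a bit back to a binarized value: 0 -> -1, 1 -> +1."""
    return 2 * bit - 1


def xnor(a_bit, b_bit):
    """XNOR of two bits: 1 when the bits are equal, 0 otherwise."""
    return 1 - (a_bit ^ b_bit)


# Check all four input combinations against ordinary multiplication.
for a, b in product((-1, 1), repeat=2):
    assert to_value(xnor(to_bit(a), to_bit(b))) == a * b
```

The loop runs without raising, which confirms that XNOR on the bit encoding matches multiplication on the +1/-1 values for every input pair.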

This means that in BNNs, the multiplication operation reduces to a simple XNOR instead of a complicated floating point multiplication algorithm. We can do the XNOR operation in Minecraft as follows: (based on this very compact design)

Binarized Reduce/Addition

A reduce operation typically refers to adding up all elements of a tensor along some specific direction. In the case of BNNs, since all the operands are either +1 or -1, we only need the sign of the sum as the output of the reduction. In bit representation, this translates to a bitcounting operation. Therefore, to reduce a vector of 1s and 0s of length \(l\), we only need to count the number of 1s in the vector in some variable, say bitcount. The sum of the corresponding +1/-1 values is \(2 \cdot bitcount - l\), so the output of the reduction is bit 1 (+1) if \((bitcount \geq l/2)\) and bit 0 (-1) otherwise.

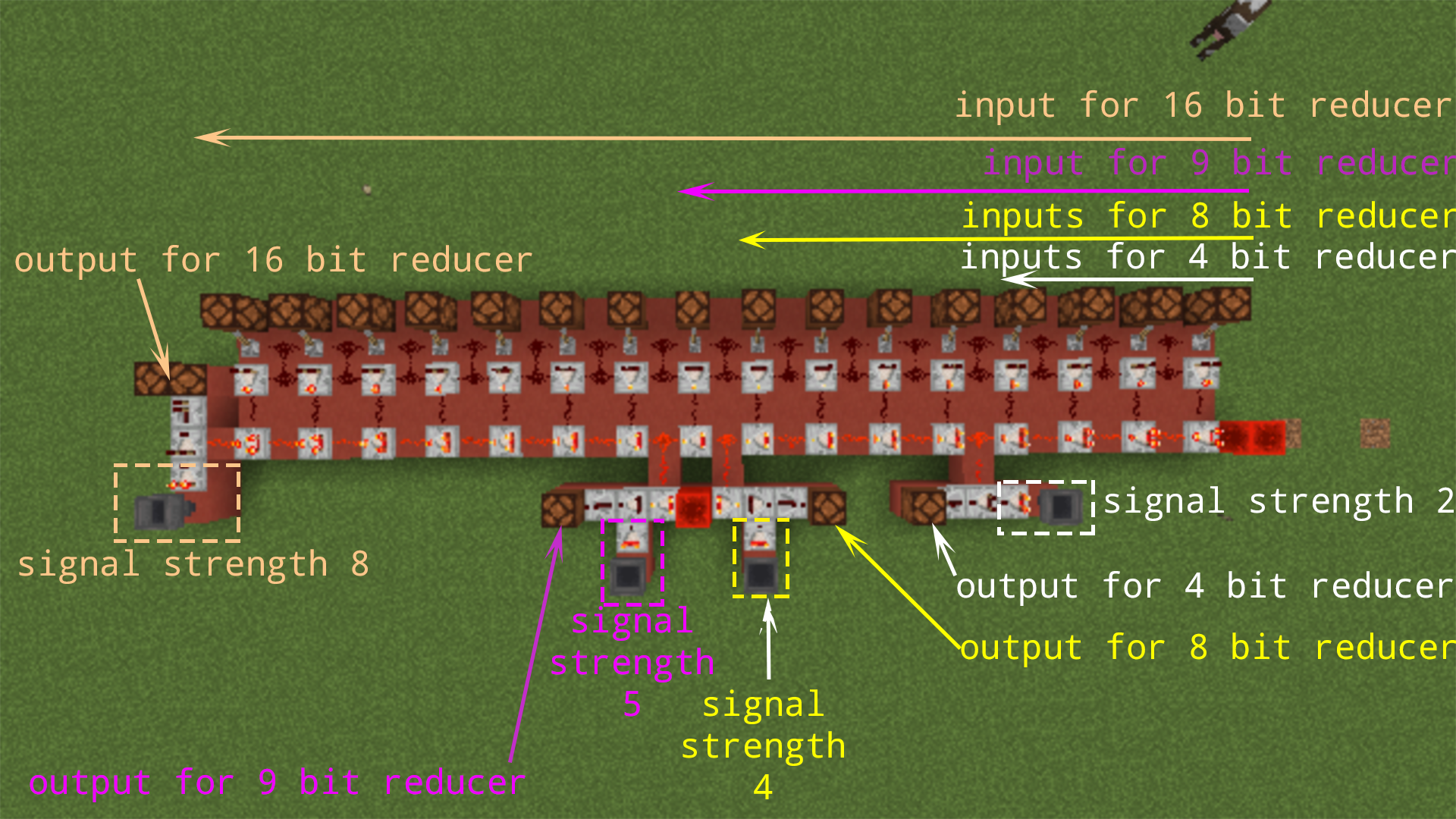
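In software, the same reduction combined with the XNOR multiplication gives a complete binarized dot product. The snippet below is a sketch under assumptions: `reduce_bits` and `binary_dot` are hypothetical names, the example vectors are made-up values, and ties are resolved to bit 1 to match the \(bitcount \geq l/2\) rule.

```python
def reduce_bits(bits):
    """Return the sign bit of the +1/-1 sum by counting the 1 bits.

    Output is 1 (+1) if bitcount >= l/2 and 0 (-1) otherwise.
    """
    bitcount = sum(bits)
    # Comparing 2 * bitcount with l avoids a fractional l/2 for odd lengths.
    return 1 if 2 * bitcount >= len(bits) else 0


def binary_dot(weight_bits, activation_bits):
    """Binarized dot product: element-wise XNOR followed by the reduction."""
    products = [1 - (w ^ a) for w, a in zip(weight_bits, activation_bits)]
    return reduce_bits(products)


# A flattened 3x3 filter and input patch (made-up values for illustration).
weights = [1, 0, 1, 1, 0, 0, 1, 0, 1]
patch = [1, 0, 0, 1, 0, 1, 1, 0, 1]
result = binary_dot(weights, patch)
```

Here 7 of the 9 XNOR products are 1, so the +1/-1 sum is 7 - 2 = 5 and `result` is bit 1.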

I couldn’t find a Minecraft version of such a bitcount reducer, so I decided to design my own as follows:

Unfortunately, this reducer architecture cannot work for more than 16 bits. That being said, I’m not a big redstone guy and I can’t even make a 4x4 flush piston door without looking up a tutorial. Maybe someone with a deeper understanding of redstone mechanics can do a much better job at this.

Scarpet - A programming language in Minecraft

While the reducer I designed will work for small neural networks (only 3x3 conv filters, not more than 16 in any dimension etc.), I wanted to support bigger and more general neural networks. Scarpet is an in-game, functional-like programming language developed by gnembon. While scarpet requires carpetmod (which means our neural networks won’t be pure vanilla Minecraft-y), it is an excellent choice for building neural nets because it is server friendly and has a nifty game_tick() function. game_tick() essentially lets you pause the game for a specified amount of time, which also takes care of our block-by-block visualization requirement. gnembon does an excellent job of explaining the language in the scarpet documentation.

Representation of Neural Nets

With scarpet as our tool, we need to focus on representing binarized neural networks in Minecraft. We can pick 2 different blocks to represent \(+1\) and \(-1\) in weights/activations. Moreover, I also thought it would be nice to be able to convert my PyTorch binarized neural network into my Minecraft representation. Therefore, I decided to generate a litematica schematic for every layer of my neural network. Litematica lets you place a schematic of a block arrangement anywhere in the world, which makes it extremely handy for recreating that arrangement in-game.

This means we need a program that can take in our PyTorch model and output a litematica schematic (the .litematica file) for every layer for us to build in our world. Unfortunately, the .litematica format isn’t very well documented. Thankfully, with the help of the developer maruohon and the NBT data exploration library, I was able to write the .litematica file properly. The code for this module is available in the nn-to-litematica folder on my scarpet-nn repo. (Note that nn-to-litematica currently supports only conv and fc layers without bias.)
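The first half of such a converter, binarizing the weights and choosing a block for each value, might look roughly like the sketch below. It is not the actual nn-to-litematica code: the two block IDs, the rule that a weight of exactly 0 maps to \(+1\), and the names `binarize` and `layer_to_blocks` are all assumptions made for illustration. Plain nested lists stand in for a weight tensor that a real converter would read from the PyTorch model, and writing the .litematica file itself is not shown.

```python
# Hypothetical block choices; any two distinguishable blocks would do.
BLOCK_FOR_BIT = {
    1: "minecraft:white_concrete",  # stands for +1
    0: "minecraft:black_concrete",  # stands for -1
}


def binarize(weight):
    """Map a real-valued weight to a bit by sign (assumed: 0.0 counts as +1)."""
    return 1 if weight >= 0 else 0


def layer_to_blocks(weights):
    """Turn a 2D weight matrix (rows of floats) into a 2D grid of block IDs."""
    return [[BLOCK_FOR_BIT[binarize(w)] for w in row] for row in weights]


# Made-up weights of a tiny fully connected layer (2 outputs, 3 inputs).
fc_weights = [[0.7, -0.2, 0.1], [-1.3, 0.4, -0.05]]
grid = layer_to_blocks(fc_weights)
```

Each entry of `grid` names the block to place at that position; how the grid is laid out in the world follows the schematic generation rules in the scarpet-nn documentation, which this sketch does not try to reproduce.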

I have written more about the litematica schematic generation rules in the documentation of scarpet-nn. With this much progress, it was just a matter of time to write some neat, modularized code for scarpet apps that implement our intended functionality.

Results

After settling on a representation, I was able to build and run the neural network in my Minecraft world. But it was extremely boring to modify the input block by block. To solve this, I wrote a scarpet app called drawingboard that lets you draw on the input layer by right-clicking on it with any sword. I implemented a small binary image classifier that could detect the digits 0 and 1 from the MNIST dataset.

See the working classifier demo:

Block by block visualization

So this is where I end this post. Feel free to reach out to me with suggestions, comments, questions or your own neural networks built in Minecraft. I would love to hear from you!